In recent months we have invested heavily in resilience across our company. This post covers how one of those investments helped us during a recent incident in which database operations failed.

Form submissions

Webflow serves both our customers and their customers. For many businesses, sales pipelines depend on incoming form traffic, so the availability and durability of this feature are critical.

A typical form submission comes in through the Webflow API, passes through spam checks, and is persisted against the customer's site. Customers can view and export these submissions in their Webflow dashboard. Customers often set up webhooks from these forms to their own internal systems, so that they can take custom actions on new submissions.

Design goals

We wanted to ensure that, even during outages, submissions would be:

- Durable: Never lost, even if persisting to the database fails

- Non-blocking: Recovery mechanisms must not delay the critical request path

- Idempotent: Safe to replay without creating duplicates

- Operable: Easy to target a single customer or to run system-wide

Phase 1 – Writing backups

The first question we had to ask was: where should backups live? We wanted enough context to replay correctly, without adding downstream dependencies that could fail before a backup was recorded.

We chose to do this as high as possible in the API layer: after basic middleware, but before any database calls are made. Because we prioritized backup availability, we chose to store backups for all submissions, including those that would later be filtered as spam and those that would go on to be persisted successfully.

We decided to use Amazon S3 to store these backups. As with any distributed system, we had to consider what would happen if the backup request itself failed, and whether it should be a blocking network call. The priority was to serve the form submission in the critical path; protecting live traffic takes precedence over backup success.
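One way to keep the backup out of the critical path is to start the write without awaiting it and to route any failure to a reporter. The sketch below is illustrative only: `BackupStore`, `RawSubmission`, `backupWithoutBlocking`, `handleSubmission`, and the key layout are all hypothetical names and assumptions, and a real implementation would wrap an S3 client behind the store interface.

```typescript
// Hypothetical storage interface; in production it might wrap an S3 client.
interface BackupStore {
  put(key: string, body: string): Promise<void>;
}

interface RawSubmission {
  siteId: string;
  receivedAt: number; // epoch milliseconds
  body: Record<string, string>;
}

// Start the backup write without awaiting it, and route any failure to a
// reporter so that it never surfaces in the request path.
function backupWithoutBlocking(
  store: BackupStore,
  submission: RawSubmission,
  reportFailure: (err: unknown) => void,
): void {
  // Assumed key layout: one object per submission, grouped by site.
  const key = `${submission.siteId}/${submission.receivedAt}.json`;
  Promise.resolve()
    // Wrapping the call in then() also captures a synchronous throw from put().
    .then(() => store.put(key, JSON.stringify(submission)))
    .catch(reportFailure);
}

// Hypothetical handler: the backup is kicked off first; spam checks and the
// database write (omitted here) would follow.
function handleSubmission(
  store: BackupStore,
  submission: RawSubmission,
  reportFailure: (err: unknown) => void,
): { accepted: boolean } {
  backupWithoutBlocking(store, submission, reportFailure);
  return { accepted: true };
}
```

With this shape, a failing store increments a metric or logs through `reportFailure`, while the caller of `handleSubmission` still gets its response immediately.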

Phase 2 – Replay

With backups being persisted, we then started building a system to re-process form submissions that could not be stored in the database during an outage. We call this system replay. It needed to run in two modes: per-site replay and global replay.

Mode 1 – Per-site replay

This mode is used when a single site, or a handful of sites, needs to be replayed individually. It is a faster, targeted way to replay the form submissions of a single customer.

Mode 2 – Global replay

This is the heavier mode: it walks the backups for all customers and replays them across the board. It is slower, but it is the one to use for a systemic outage that affects a large share of customers.
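The two modes can be modeled as a single request type with a discriminating `mode` field. This is a minimal sketch under assumptions: `BackupRecord`, `ReplayRequest`, and `selectBackups` are hypothetical names, and a real system would list objects from storage rather than filter an in-memory array.

```typescript
interface BackupRecord {
  siteId: string;
  receivedAt: number; // epoch milliseconds
}

// The two modes described above, modeled as a discriminated union.
type ReplayRequest =
  | { mode: "site"; siteIds: string[]; from: number; to: number }
  | { mode: "global"; from: number; to: number };

// Return the backups that a replay run should visit.
function selectBackups(all: BackupRecord[], req: ReplayRequest): BackupRecord[] {
  const inWindow = all.filter(
    (b) => b.receivedAt >= req.from && b.receivedAt <= req.to,
  );
  if (req.mode === "global") {
    return inWindow; // every site within the time window
  }
  const wanted = new Set(req.siteIds);
  return inWindow.filter((b) => wanted.has(b.siteId));
}
```

Keeping both modes behind one function means the downstream replay logic does not need to know which mode selected the backups.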

Submission Hashes

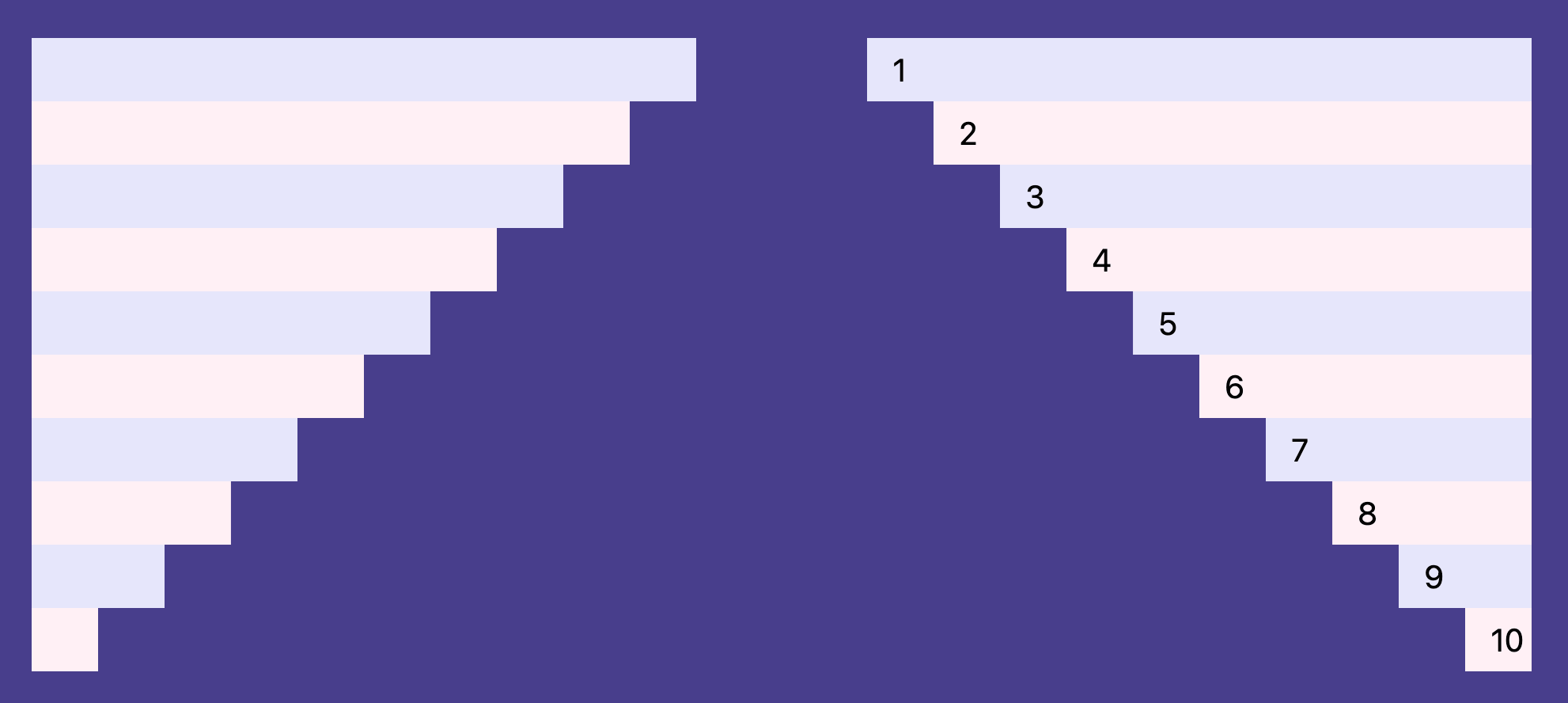

Outages rarely fail 100% of requests. A typical pattern looks like:

✅⛔⛔⛔⛔⛔⛔⛔⛔⛔⛔⛔⛔⛔

Here ✅ represents a submission that was processed successfully and ⛔ represents one that failed before it was stored in the database. We wanted to ensure that if we replayed from start to finish, we would not re-ingest the ✅ submissions that had already been persisted.

To do this, we implemented a concept called Submission Hashes. Each form submission is represented by a unique, stable SHA-256 hash that covers everything that makes the submission unique: the timestamp at which it entered our system, customer data, the form submission body, metadata, and more. These hashes are computed in the critical path for every form submission, regardless of replay context.

We also stored the hash and added a database index on that field, so that we could efficiently query form submissions by submission hash to determine whether a given submission had already been persisted.

What this means is that by computing submission hashes, we were able to build an idempotent replay process: we could replay submissions over a wide range and be sure that the only submissions actually written were those that had not succeeded the first time. It also meant that when choosing the time window to replay, we could safely pick timestamps well before and well after the outage to cover the entire range, without worrying about scanning records that we had already persisted.
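A stable hash of this kind can be sketched with Node's built-in `crypto` module. The field list in `HashInput`, the `canonicalize` helper, and the in-memory `persistedHashes` set (standing in for the indexed database column) are assumptions for illustration; the actual set of hashed fields is larger than shown here.

```typescript
import { createHash } from "node:crypto";

// Fields assumed to identify a submission; the real set includes more metadata.
interface HashInput {
  siteId: string;
  workspace: string;
  body: Record<string, string>;
  createdOn: string; // ISO-8601 timestamp at which the submission arrived
}

// Serialize with sorted keys so that property order cannot change the hash.
function canonicalize(value: unknown): string {
  if (Array.isArray(value)) {
    return `[${value.map((v) => canonicalize(v)).join(",")}]`;
  }
  if (value !== null && typeof value === "object") {
    const obj = value as Record<string, unknown>;
    const parts = Object.keys(obj)
      .sort()
      .map((k) => `${JSON.stringify(k)}:${canonicalize(obj[k])}`);
    return `{${parts.join(",")}}`;
  }
  return JSON.stringify(value);
}

function submissionHash(input: HashInput): string {
  return createHash("sha256").update(canonicalize(input)).digest("hex");
}

// In-memory stand-in for the indexed hash column described above.
const persistedHashes = new Set<string>();

function isAlreadyPersisted(input: HashInput): boolean {
  return persistedHashes.has(submissionHash(input));
}
```

Sorting keys before hashing is a design choice in this sketch: it keeps the hash identical whether the submission is hashed live or rebuilt from a backup with a different property order.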

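    // Look up the submission hash to see whether this entry was already stored.
    const isDuplicate = await checkIfDuplicateFormSubmission({
      siteId,
      workspace,
      body,
      createdOn,
    });

    // Already persisted: skip it so that replay stays idempotent.
    if (isDuplicate) {
      return;
    }

Chaos tests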

In addition to unit and integration tests, we needed to simulate a real outage to know that this tooling worked. To do so, we ran a test in our staging environment in which we deliberately broke form submissions. We did this by throwing an exception in the ingestion path of the form submission handler, simulating a typical failure.

We then tried to submit various forms which, as expected, were not processed correctly. Next we deployed a build that restored the environment (simulating a rollback of a breaking change). That gave us our replay scenario: form submissions that were missing from the customer database but present in our backups.

From there we ran the replay job. First we ran it outside the failure window to confirm that nothing happened. We then ran the job squarely inside the failure window to prove that we could replay the missing submissions. Next we ran a replay spanning from before to after the failure period, which showed that only the missing submissions were replayed. Finally, we ran the replay several times, which showed that the operation was idempotent and that submission hashes deduplicated the submissions.
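The four replay runs above can be mirrored in a small in-memory simulation. This is a sketch, not the actual replay job: `Backup`, `replay`, and the sample data are hypothetical, and a `Set` of hashes stands in for the database.

```typescript
interface Backup {
  hash: string; // submission hash computed when the form was received
  receivedAt: number; // epoch milliseconds
}

// Replay every backup in [from, to] whose hash is not yet in the database.
// Returns the number of submissions that were actually written.
function replay(backups: Backup[], database: Set<string>, from: number, to: number): number {
  let written = 0;
  for (const b of backups) {
    if (b.receivedAt < from || b.receivedAt > to) continue;
    if (database.has(b.hash)) continue; // already persisted: skip
    database.add(b.hash);
    written += 1;
  }
  return written;
}

// Five backups; the simulated outage (t = 20..40) kept three out of the database.
const backups: Backup[] = [10, 20, 30, 40, 50].map((t) => ({ hash: `h${t}`, receivedAt: t }));
const database = new Set<string>(["h10", "h50"]);

const outsideWindow = replay(backups, database, 0, 15); // nothing is missing here
const insideWindow = replay(backups, new Set(database), 20, 40); // run on a copy
const spanning = replay(backups, database, 0, 100); // before and after the outage
const repeated = replay(backups, database, 0, 100); // second pass writes nothing
console.log(outsideWindow, insideWindow, spanning, repeated); // prints: 0 3 3 0
```

The last two numbers are the important ones: a wide window writes only the three missing submissions, and repeating the same run writes none.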

After this we were confident that this was a tool that we could use in an incident.

Spam considerations

Forms attract spam. We use multiple defenses at the network and application layers. One app-layer check depends on a downstream token with a 1-hour TTL (time to live); that signal cannot be trusted during longer incidents. Replay falls back on the other layers, but we chose “more information over less” for recovery, so some extra spam may reach customers in certain replay scenarios.

Incident management

During our recent incident, this resilience work was put to the test. Database writes were failing, and we had to assess whether a replay was needed.

To evaluate this, we looked at our metrics. In general, there should be one backup per form submission persisted; the expected ratio during normal traffic is roughly 1:1. During a service disruption, however, the metrics would show a gap between backup volume and persisted submission volume, indicating that a replay was needed.

We had a runbook, monitors, and the muscle memory of having done this before. So we assessed the state of the world and came up with a plan to run a global replay operation.

The scale of the problem and the need for replay were considerable, with a few hours of backups to work through. Fortunately, the replay operation went off without a hitch: it processed millions of backups and correctly delivered the form submissions to our customers.
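The gap check can be expressed as a simple ratio over two counters. This is a minimal sketch: `VolumeSample`, `missingFraction`, `replayLikelyNeeded`, and the 5% default threshold are assumptions chosen for illustration, not values from our monitors.

```typescript
interface VolumeSample {
  backupsWritten: number;
  submissionsPersisted: number;
}

// Fraction of backed-up submissions that never reached the database.
function missingFraction(sample: VolumeSample): number {
  if (sample.backupsWritten === 0) return 0;
  const missing = Math.max(0, sample.backupsWritten - sample.submissionsPersisted);
  return missing / sample.backupsWritten;
}

// Hypothetical threshold: the ratio is only roughly 1:1 in normal traffic,
// so a small tolerance avoids flagging ordinary noise.
function replayLikelyNeeded(sample: VolumeSample, threshold = 0.05): boolean {
  return missingFraction(sample) > threshold;
}
```

For example, a window with 1,000 backups and 400 persisted submissions would be flagged, while 1,000 backups and 998 persisted submissions would not.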

What's next

There is more to do here.

- Move the backup earlier in the stack to shrink the window in which a submission has no backup

- Smarter spam filtering during replay and in the critical path

- Faster, cheaper replay: streaming pipelines, backpressure, and finer-grained partitioning to reduce compute, while retaining the existing idempotence and resilience

Conclusion

Losing customer data is catastrophic. Resilience in this area is crucial for our customers, so it is essential to have systems in place for data recovery. Just as critical is having a runbook and the muscle memory to operate those systems safely. We take service outages very seriously. When they occur, we prioritize safe, verifiable recovery. In this incident we recovered nearly a million backed-up submissions and delivered them to the correct recipients.

Does building resilient distributed systems sound interesting to you? If so, come and work with us!
